SSIS is the successor to Microsoft SQL Server DTS. To that end, it is very much an ETL (extract, transform and load) tool, however with the new product, there are several new possibilities that were either untenable or inpractical to implement before.

I am by no means a data warehouse or business intellegence expert and would defintely consider myself a generalist on related topics, but as a system developer/archtiect, addressing ETL is sometimes part of the job. Historically, I've pretty much glazed over in conversations about star schemas, normilization, facts and dimensions as my world is largely OLTP based. That said, I am quite impressed by SSIS and I'll explain why.

In previous versions of DTS, the engine itself was coupled to SQL Server. This was before the dawn of .NET and the idea of hosted environments and runtimes were still quite distant. To make a long story short, you developed DTS packages on SQL Server and deployed to SQL Server. Data sources and transformations were very tightly bound to the package itself and to the security context of the SQL Server on which the DTS package ran. Although you could drop down to a command line, remote execution was either ardous or impractical due to the severe lack of reliability and the reason was simple- classic DTS was meant to run in the context of the SQL Server that "hosted" it. As a result, packages were normally scheduled through SQL Server Agent jobs and life was good.

A fantastic way to introduce SSIS concepts would be to make analogies to BizTalk Server, because in many ways, it is quite obvious that the two teams talked during development. Everything from the fully integrated Visual Studio 2005 integration to the really slick design surface and constituent shapes seem very much influenced by BTS. Understanding that I may have already lost a large majority of readers in this analogy, I'll leave it at this: SSIS is very much to the database layer what BTS is the middle tier. Fair enough? Good.

Not so fast. There's no way I'm getting away with that, so let's go into some details.

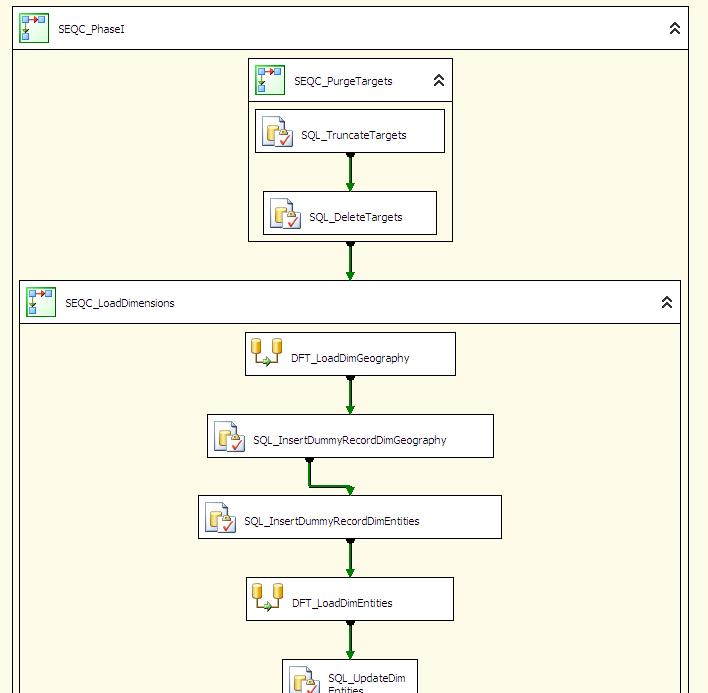

I already mentioned the design and development experience. Whethere your using Visual Studio 2004 SSIS projects or BIDS (Business Intellegence Design Studio), the design time experience is pretty sweet. Scope/boundaries are represented as Sequence Containers and Tasks are the drivers for the overall logic of the package. For example, a Sequence Container will contain Tasks and each Task is linked to other Tasks via precedence constraints. While this concept existed in DTS, it was largely brain dead and confusing. In SSIS, you drag a Task constraint from the predecesor to the contingent task and a green arrow intuitevely reflects this relationship. Precedence constraints can also be established between Sequence Containers, and the result of this apprach is reflected in the following diagram which represents the Control Flow of the package logic:

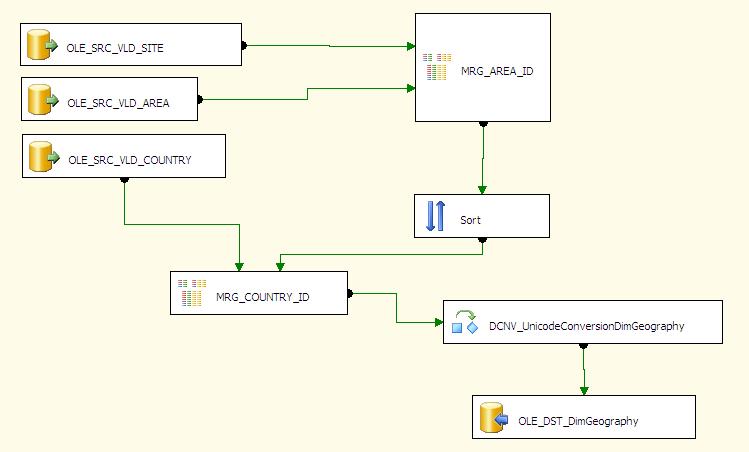

A major distinguishing factor between SSIS and DTS is the explicit seperation of logic flow from data manipulation, or Data Flow. The Data Flow Task, which is a part of the Control Flow, seeds the Data Flow, which is the sequencing of ETL tasks at a finer grained level.

These Data Flow tasks are all about transformation, and with the introduction of ADO.NET-like DataSets, a new emphasis is placed on working with data from in-memory data streams. Like its predecessor, one of the big strengths here is the ability to define data sources on different machines, and this is now the role of the Connection Manager. What is fantastic about the Connection Manager model, however, is the ability to configure the connections and deployment time via a facility known as SSIS Package Configurations. This is really an excellent approach to addressing the deployment problem of targetting different servers and is a great pattern that could very well be applied to ASP.NET as well.

Back to Data Flow. In addition to Transformation Tasks and Data Sources (which reference Connection Managers), it should come as no surprise that SSIS handles XML input and SOAP web service interaction naturally. In addition, messaging via MSMQ is also natively supported. This is really where the overlap (at a purely conceptual level) with BTS comes in. SSIS Packages can be deployed to any SQL Server 2005 machine which really acts as a host. Once you deploy the package to its host, you can run the package literally from anywhere, either interactively or programatically. So in a sense, SSIS really becomes a service which waits to be invoked, only its concerns lie more on the database side, particularly in ETL.

It should be noted as well that as a CLR host, SQL Server facilitates the loading of managed assemblies from within SSIS packages. This is valuable, for instance, when you want to call into a managed .NET DAL that is perhaps exposed via a SOAP web service which returns a dataset or a datareader. Why not? SSIS natively understands ADO.NET data transfer objects. The potential for abuse here should be evident: maintaining a seperation of concerns between business tiers and data tiers is essential to avoid spaghetti architectures.

Let's wrap up by looking at a practical example. An ASP.NET application provides the front end for an adminstration console which is used to refresh a data mart with OLTP data on demand. The ASP.NET application connects to a WCF service which in turn invokes the SSIS API and executes the package remotely. In this scenario, the machines are truly distributed. The ASP.NET application is hosted on an IIS 6 Server and the WCF service may be hosted on a seperate IIS server although both on the same machine is possible as well. Where things get interesting is in the fact that the source OLTP and target Data Mart are very likely on different machines, and the OLTP data could very well come from several disperate sources on different machines.

In this scenario, there are a few things to consider.

First, the ASP.NET client is completely decoupled from the WCF service and underlying SSIS API calls. All the client knows of the service is the address, binding and contract.

Second, the WCF service wraps the call to the SSIS API, completely isolating all aspects of the transaction from the client.

Third, the WCF service interacts with the SSIS package as a service- the only known information is the package name and host server. The service doesn't necessarily need to know or care what SQL Servers are being targetted by the ETL process- this is all encapsulated by the SSIS package.

Optionally, of course, the service could pass specific parameters in from the ASP.NET client, instructing the SSIS package to manage its Connection Managers at runtime. This is a truly powerful concept where servers may vary from environment to environment (i.e. Dev, Test, Prod) or in large enterprises where servers are constantly upgraded, changed and moved around.

Based on this .NET paradigm, all of the existing rules for design and security apply. Specifically, from a security perspective, using a fixed identity to flow the call from the ASP.NET client to the WCF service, and from the WCF service to the SSIS host is required. Impersonation simply won't work unless you are delegating which is undesirable in most enterprise scenarios.

SSIS very much fits nicely into the .NET architecture stack, and like BizTalk Server can be wraped in descrete services to maximize its ability to perform serious heavy lifting while leaving a small footprint on the system-level design.

In closing, SSIS has very much come of age and for the most part, has been a pleasure to work with- even as a DW generalist ;-)

In a future post, I'll walk through how to accomplish the described scenario step by step and this may well turn in to future speaking topic- let me know what you think!